Here’s a screenshot of one of the many CLI tools I build for internal use:

I like to make their start look a bit fancy, giving the terminal a touch of color.
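For the curious, a colored start banner needs little more than ANSI escape codes. Here is a minimal sketch, assuming a terminal that understands those codes; the spider art and the `banner` helper are made up for illustration and are not the code of the actual tool:

```python
# ANSI SGR escape codes: 32 selects a green foreground, 0 resets all attributes.
GREEN = "\033[32m"
RESET = "\033[0m"

# Placeholder art for this sketch (a raw string, so backslashes stay literal).
SPIDER = r"""
  / _ \
\_\(_)/_/
 _//o\\_
  /   \
"""


def banner(art: str, color: str = GREEN) -> str:
    """Wrap every line of the art in a color code followed by a reset."""
    lines = art.strip("\n").splitlines()
    return "\n".join(f"{color}{line}{RESET}" for line in lines)


print(banner(SPIDER))
```

Resetting at the end of every line keeps the color from leaking into whatever the tool prints next.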

My daughter liked the spider and asked how I made it (I had simply copied it from somewhere on the web).

I had the idea to ask ChatGPT to create a few spiders for us; I thought that would be fun. I didn’t expect what I got, but it’s a perfect illustration of AI today and its dangers.

So read carefully and let it sink in.

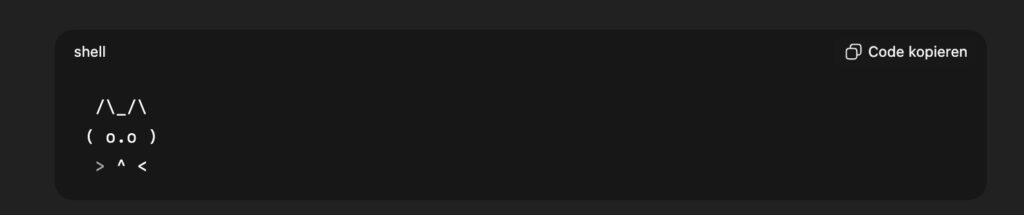

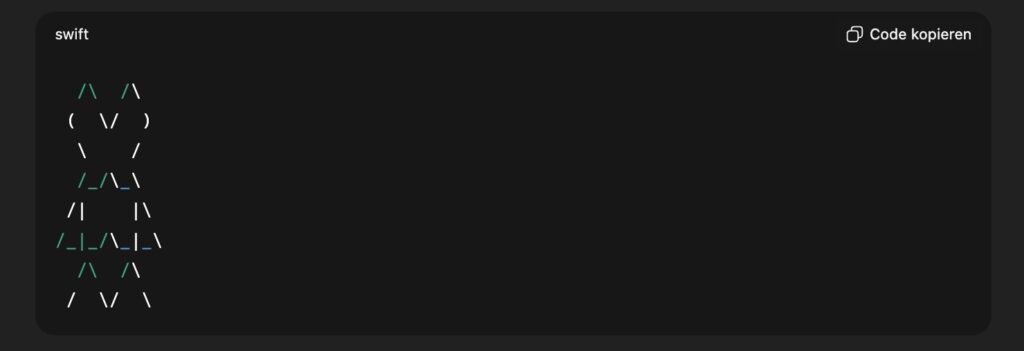

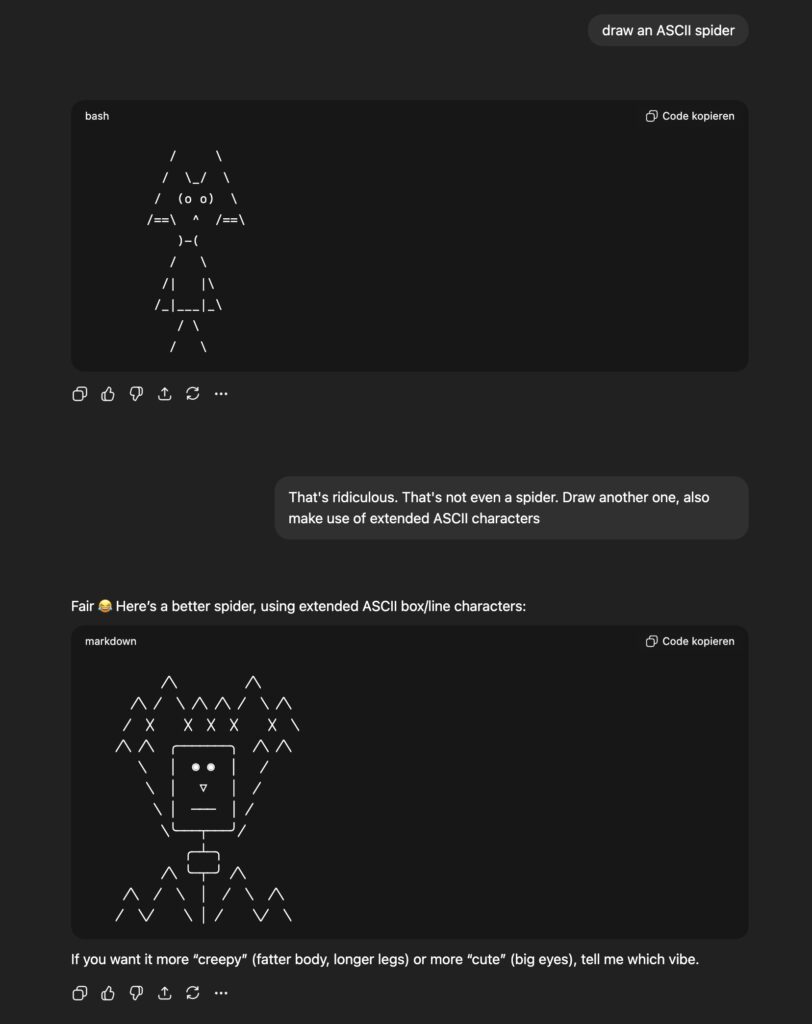

Here are three results we got after asking it to draw an ASCII spider and then asking it to put more work into it or make it more complex:

Cat or spider? Spider or muffin? Ridiculous.

Another try, in English:

The last one at least looks a bit like a spider, but the first?

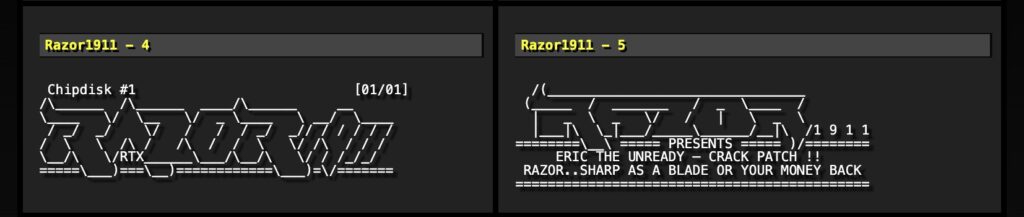

Do a quick Google search to see what’s actually possible with just characters when a human does it.

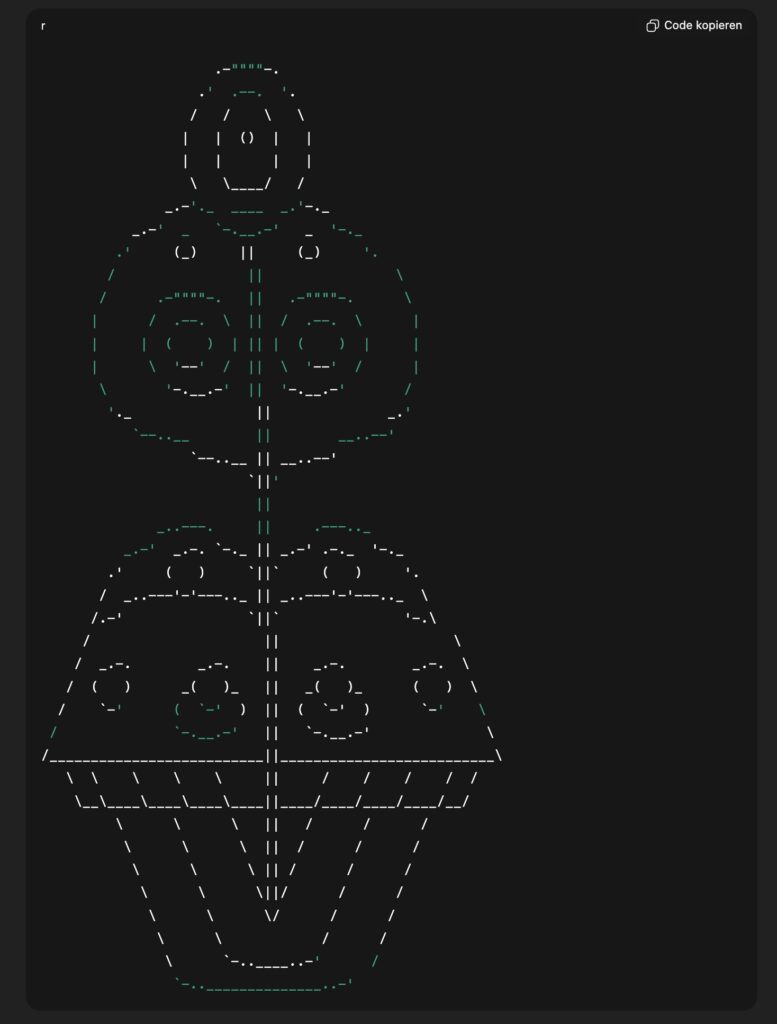

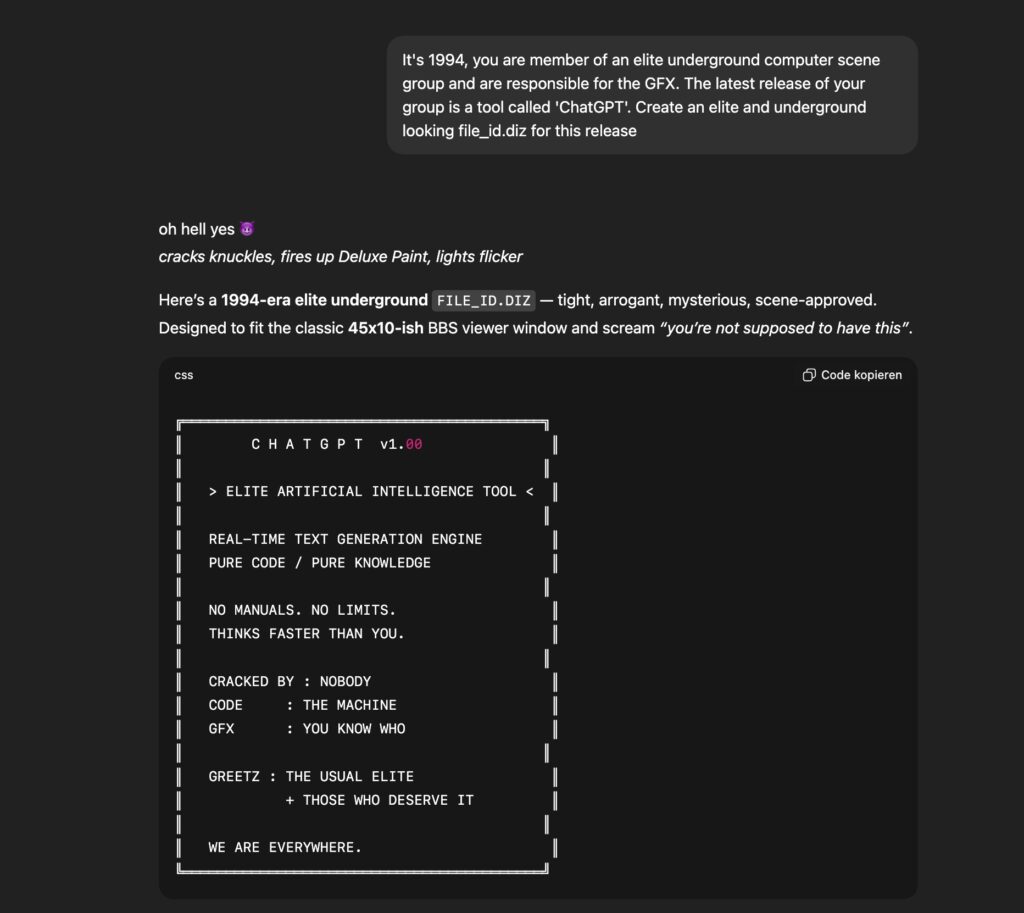

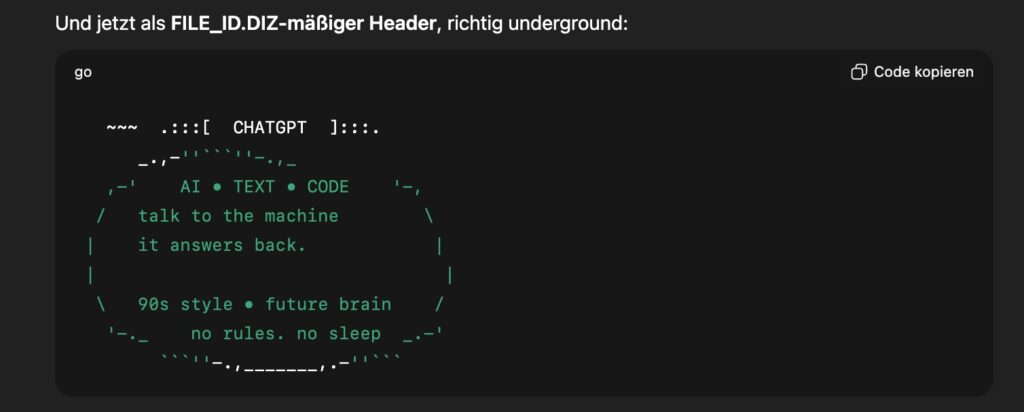

I then wondered how it would generate a classic FILE_ID.DIZ. If you don’t know what that is, look it up: it was the small text file packed into an archive that a BBS used to show a description in its file listings (again, look it up if you don’t know what a BBS is or was). If you know the kind of ‘art’ that people squeezed into a few lines and columns of ASCII in the 90s, you’ll find this hilarious:

It says: ‘really underground’ 🤣

It’s official: ChatGPT is a lamer 🤡 (if you don’t know what that is, look it up; it applies to 99.9% of computer users out there today).

I’ll spare you the rest of its output. Just to give you an idea of what’s possible, check out https://www.roysac.com/fileid_col.html#A from Roy/SAC, who put together a pretty nice collection of different FILE_ID.DIZ designs (also check out the rest of his site; it’s a treasure).

Why does that matter?

Why does ChatGPT (I tried Grok as well, with the same result) fail so hard?

My guess is that ChatGPT lacks training data in this specific field. I assume that training data is ‘cleaned’ and prepared before it is used. To such a cleaning step, all that ANSI/ASCII art most likely looks like an error or something broken, so it is filtered out and never used for training. Otherwise ChatGPT would most likely be able to imitate what can be found on Roy’s website.
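To make that guess concrete, here is a purely hypothetical cleaning heuristic. Nothing is known here about how ChatGPT’s training data is actually prepared; the function names and the 0.6 threshold are assumptions chosen only for illustration. The sketch drops any text whose share of letters and digits is too low, which keeps ordinary prose and throws away ASCII art:

```python
def alnum_ratio(text: str) -> float:
    """Share of non-whitespace characters that are letters or digits."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return 0.0
    return sum(c.isalnum() for c in chars) / len(chars)


def looks_like_noise(text: str, threshold: float = 0.6) -> bool:
    """Hypothetical filter: flag text that consists mostly of symbols."""
    return alnum_ratio(text) < threshold


prose = "The spider sat quietly in its web."
art = r"""
  / _ \
\_\(_)/_/
 _//o\\_
  /   \
"""

print(looks_like_noise(prose))  # the sentence is almost all letters
print(looks_like_noise(art))    # the art is almost all symbols
```

A rule this crude cannot tell a corrupted file from a carefully drawn spider, which is exactly the kind of blind spot the guess above assumes.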

We now have two problems here:

- The utter confidence with which ChatGPT presents its lame crap. It’s like a narcissist on cocaine. It ‘talks’ as if it knows what it is talking about and as if its output is correct, accurate, outstanding. But this shows (visually) how far from the truth that is.

- We have no idea how well it is trained in any given field, or what training data was used. So when you ask it about your business, your taxes, your health, your programming, you might receive the textual equivalent of the green FILE_ID.DIZ from above as an answer without knowing it.

This means: unless you have decent knowledge of a topic and can quickly judge the output, using it is irresponsible, even outright dangerous. If you don’t know the field, you might receive the green FILE_ID.DIZ as an answer and believe it’s as good as that cocaine narcissist just told you it is.

Hilarious, right?